Presumably ChatGPT is trained on corpora of things written by humans, and yet it doesn't sound like any human I know. Is there a population somewhere of people who write in chirpy bulleted lists that I've somehow managed to avoid?

Sam Altman suggested that OpenAI could reach $100B in revenue by 2027. Anthropic reportedly forecasted $70 billion in revenue by 2028. Satya reacts to these projections.

other than claude what is the best model to run openclaw with? want to try out some other models that don’t cost too much and are still decent at basic tasks (bonus points for personality like claude)

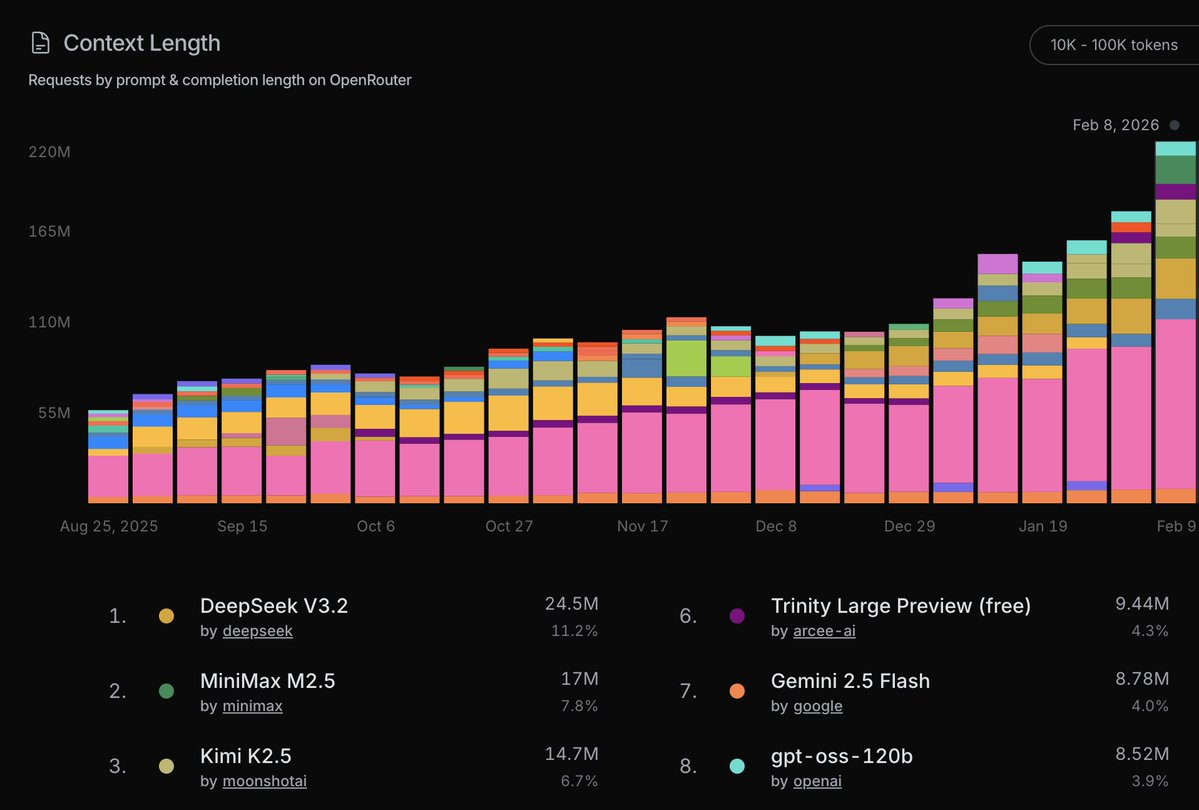

Significant growth in long-context generations on OpenRouter over the past few weeks 👀 First, here is the 10K - 100K token range:

I love the expression “food for thought” as a concrete, mysterious cognitive capability humans experience but LLMs have no equivalent for. Definition: “something worth thinking about or considering, like a mental meal that nourishes your mind with ideas, insights, or issues that require deeper reflection. It's used for topics that challenge your perspective, offer new understanding, or make you ponder important questions, acting as intellectual stimulation.” So in LLM speak it’s a sequence of tokens such that, when used as a prompt for chain of thought, the samples are rewarding to attend over, via some yet undiscovered intrinsic reward function. Obsessed with what form it takes. Food for thought.

Dear everyone who uses AI to write: You aren't fooling anyone. That is all.

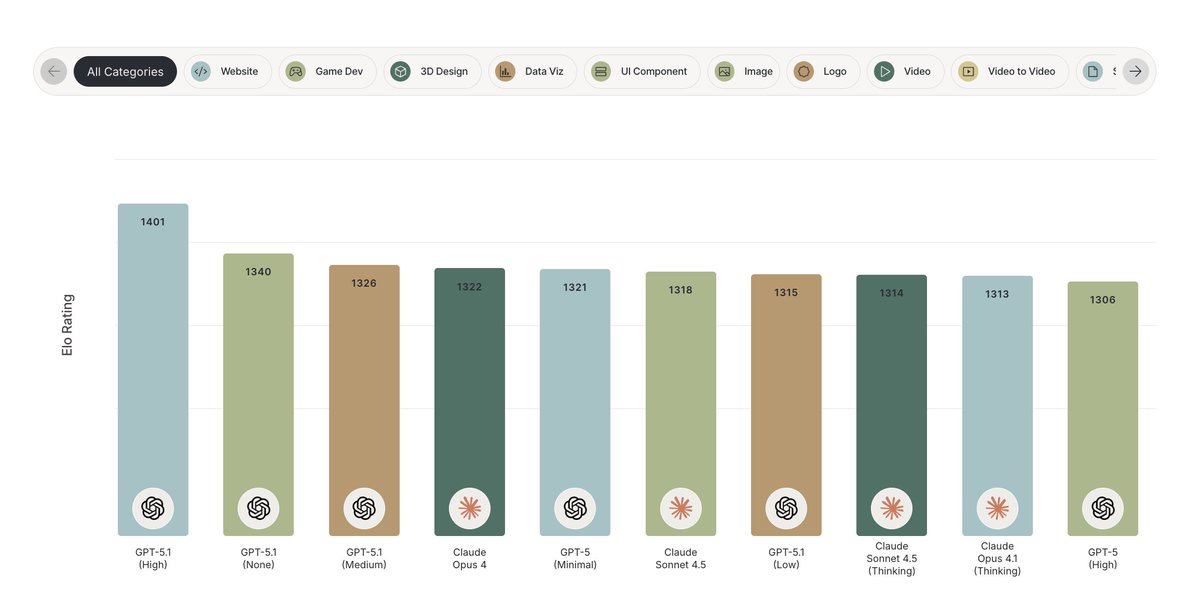

Claude's domination of Design Arena has ended: GPT-5 and the new GPT-5.1 now top the benchmark.

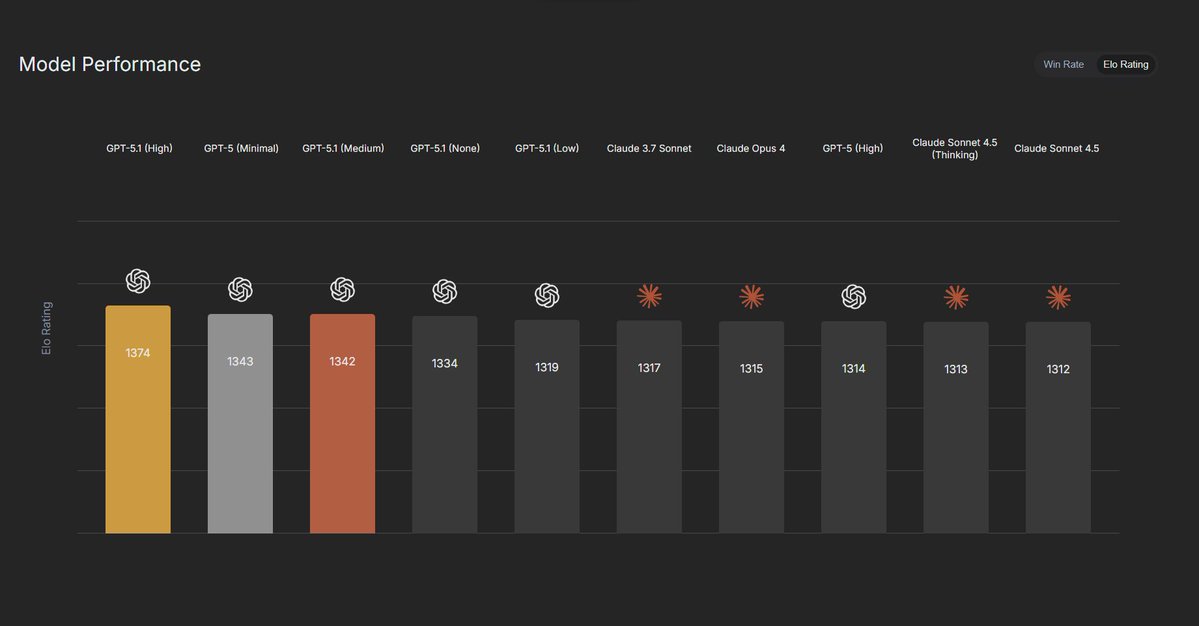

GPT-5.1 is out! It's a nice upgrade. I particularly like the improvements in instruction following, and the adaptive thinking. The intelligence and style improvements are good too.

“I’ve consistently found the best way to understand what language models can do is to push them to their limits, and then study where they start to break down.”

Building a C compiler with a team of parallel Claudes (anthropic.com)

We’ve developed a new way to train small AI models with internal mechanisms that are easier for humans to understand. Language models like the ones behind ChatGPT have complex, sometimes surprising structures, and we don’t yet fully understand how they work. This approach helps us begin to close that gap.

Understanding neural networks through sparse circuits

GPT-5.1 is now available in the API. Pricing is the same as GPT-5. We are also releasing gpt-5.1-codex and gpt-5.1-codex-mini in the API, specialized for long-running coding tasks. Prompt caching now lasts up to 24 hours! Updated evals in our blog post.

Researchers at @Stanford showed that fine-tuning language models to maximize engagement, sales, or votes can increase harmful behavior. In simulated social media, sales, and election settings, models optimized to “win” produced more deceptive and inflammatory content, a…

Stanford Researchers Coin “Moloch’s Bargain,” Show Fine-Tuning Can Affect Social Values (deeplearning.ai)

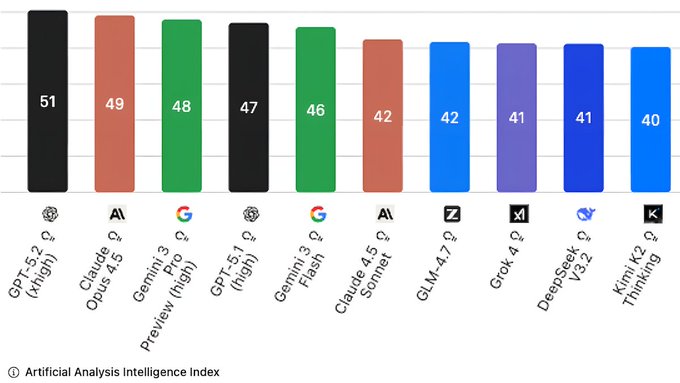

Artificial Analysis released version 4.0 of its Intelligence Index, replacing saturated benchmarks with new tests focused on economically useful work, factual reliability, and reasoning. The update aims to better capture how large language models perform in business contexts, rather than on narrow knowledge benchmarks. It reshuffles model rankings, with GPT-5.2 leading a crowded pack of top competitors. Read our summary of the Intelligence Index in The Batch: https://hubs.la/Q0416mp40

World Labs CEO Fei-Fei Li: Language alone is a lossy representation of the physical world. "Just a simple meal of making pasta... one could imagine using language to describe let's say about 15 minutes or 20 minutes of that process. But it’s still a lossy representation." "The nuance of how you cook the sauce, how you put the pasta in the water, what the pasta [does] in the water is impossible to use language alone to describe." "So much of the physical world’s process... is beyond the description of language." @drfeifei @theworldlabs

GLM-5 is a killer model. Genuinely super impressed. Live in 20ish to talk about it.

The hottest new programming language is English

Why kids at British schools are usually called by their last name: 17 yo was in a five-a-side football match, and all five boys on the team had the same first name.

Crazy how English became a programming language before HTML did

Anthropic has very much regressed in the last few months. Their web app is quite sluggish and latency is very high. You can't do basic things like changing the model mid-conversation. It takes a good while to get Sonnet or Opus to respond. Claude Code is also very fucked with the recent limit changes. Not great overall.