Product management

Frequently Asked Questions

Even the best developer tools mostly still don't let you sign up for an account via API. This is a big miss in the Claude Code age because it means that Claude can't sign up on its own. Putting all your account management functions in your API should be table stakes now.
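As a rough illustration of what "sign up via API" could look like from an agent's side, here is a minimal sketch that builds (but does not send) an account-creation request. The base URL, the `/accounts` endpoint, and the `email`/`plan` fields are all hypothetical and do not describe any real service.

```python
import json
from urllib.request import Request

# Hypothetical base URL; not a real service.
BASE_URL = "https://api.example.com/v1"


def build_signup_request(email: str, plan: str = "free") -> Request:
    """Build a POST request that would create an account (nothing is sent)."""
    # Assumed payload shape: an email address and a plan name.
    payload = json.dumps({"email": email, "plan": plan}).encode("utf-8")
    return Request(
        url=f"{BASE_URL}/accounts",  # hypothetical endpoint
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_signup_request("agent@example.com")
print(req.get_method(), req.full_url)
```

The point of the sketch is only that account creation becomes an ordinary request an agent can issue, with no browser form in the loop.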

Great meeting with PM @narendramodi today to talk about the incredible energy around AI in India. India is our fastest growing market for Codex globally, up 4x in weekly users in the past 2 weeks alone. 🇮🇳!

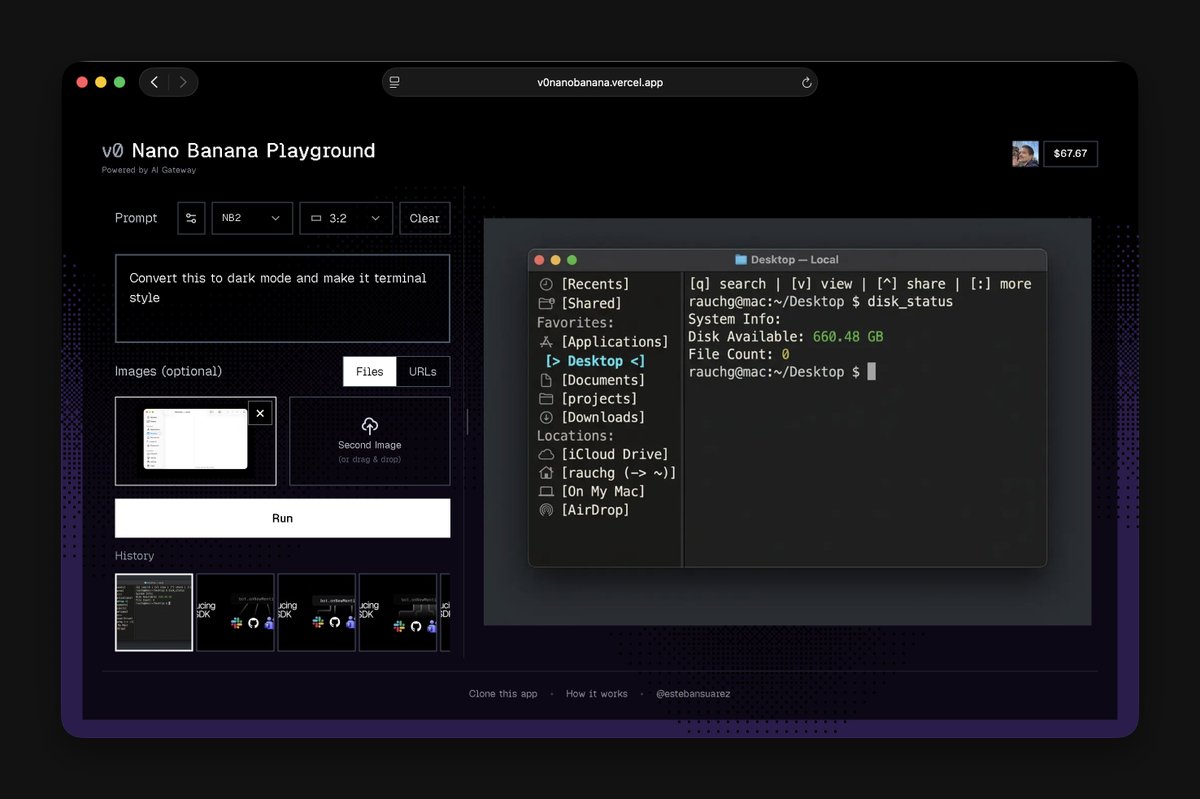

We've upgraded http://v0nanobanana.vercel.app with Nano Banana 2 via @vercel AI Gateway. What's cool about this playground is not just how convenient it is (try pasting, dragging, parallel jobs… it's good.) It's that you pay with your own @vercel AI Wallet. You sign in, then we debit your usage from AI Gateway. In this most recent iteration, you can now top up the Gateway credits directly from the app. The code is available to you so you can make your own apps where users pay for their AI inference.
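The "sign in, debit usage, top up" flow described above can be sketched as a small in-memory ledger. This is not the playground's actual code or the AI Gateway API; the `Wallet` class, `run_job` function, and the per-job cost are hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Wallet:
    """Hypothetical per-user credit balance, stored as integer cents."""
    balance_cents: int = 0

    def top_up(self, amount_cents: int) -> None:
        """Add credits to the wallet."""
        if amount_cents <= 0:
            raise ValueError("top-up amount must be positive")
        self.balance_cents += amount_cents

    def debit(self, cost_cents: int) -> None:
        """Charge usage against the wallet, refusing to go negative."""
        if cost_cents < 0:
            raise ValueError("cost must not be negative")
        if cost_cents > self.balance_cents:
            raise RuntimeError("insufficient credits")
        self.balance_cents -= cost_cents


def run_job(wallet: Wallet, cost_cents: int) -> str:
    """Debit the user first, then run the (placeholder) inference job."""
    wallet.debit(cost_cents)
    return "job-result-placeholder"


wallet = Wallet()
wallet.top_up(500)                       # user adds 5.00 in credits
result = run_job(wallet, cost_cents=120) # assumed cost of one job
print(wallet.balance_cents)
```

Debiting before running the job is one possible design choice: it avoids serving a request the user cannot pay for, at the cost of needing a refund path if the job fails.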

Meet Kimi K2.5, Open-Source Visual Agentic Intelligence.
- Global SOTA on Agentic Benchmarks: HLE full set (50.2%), BrowseComp (74.9%)
- Open-source SOTA on Vision and Coding: MMMU Pro (78.5%), VideoMMMU (86.6%), SWE-bench Verified (76.8%)
- Code with Taste: turn chats, images & videos into aesthetic websites with expressive motion.
- Agent Swarm (Beta): self-directed agents working in parallel, at scale. Up to 100 sub-agents, 1,500 tool calls, 4.5× faster compared with single-agent setup.
- K2.5 is now live on http://kimi.com in chat mode and agent mode.
- K2.5 Agent Swarm in beta for high-tier users.
- For production-grade coding, you can pair K2.5 with Kimi Code: https://kimi.com/code
- API: https://platform.moonshot.ai
- Tech blog: http://kimi.com/blogs/kimi-k2-5.html…
- Weights & code: https://huggingface.co/moonshotai/Kimi-K……

I'm joining @OpenAI to bring agents to everyone. @OpenClaw is becoming a foundation: open, independent, and just getting started.🦞 https://steipete.me/posts/2026/openclaw

OpenClaw, OpenAI and the future | Peter Steinberger

We’re launching Nano Banana 2, built on the latest Gemini Flash model. 🍌 It’s state-of-the-art for creating and editing images, combining Pro-level capabilities with lightning-fast speed. 🧵

Say hello to Nano Banana 2, our best image generation and editing model! 🍌 You can access Nano Banana 2 through AI Studio and the Gemini API under the name Gemini 3.1 Flash Image. We are also introducing new resolutions (lower cost) and tools like Image Search!

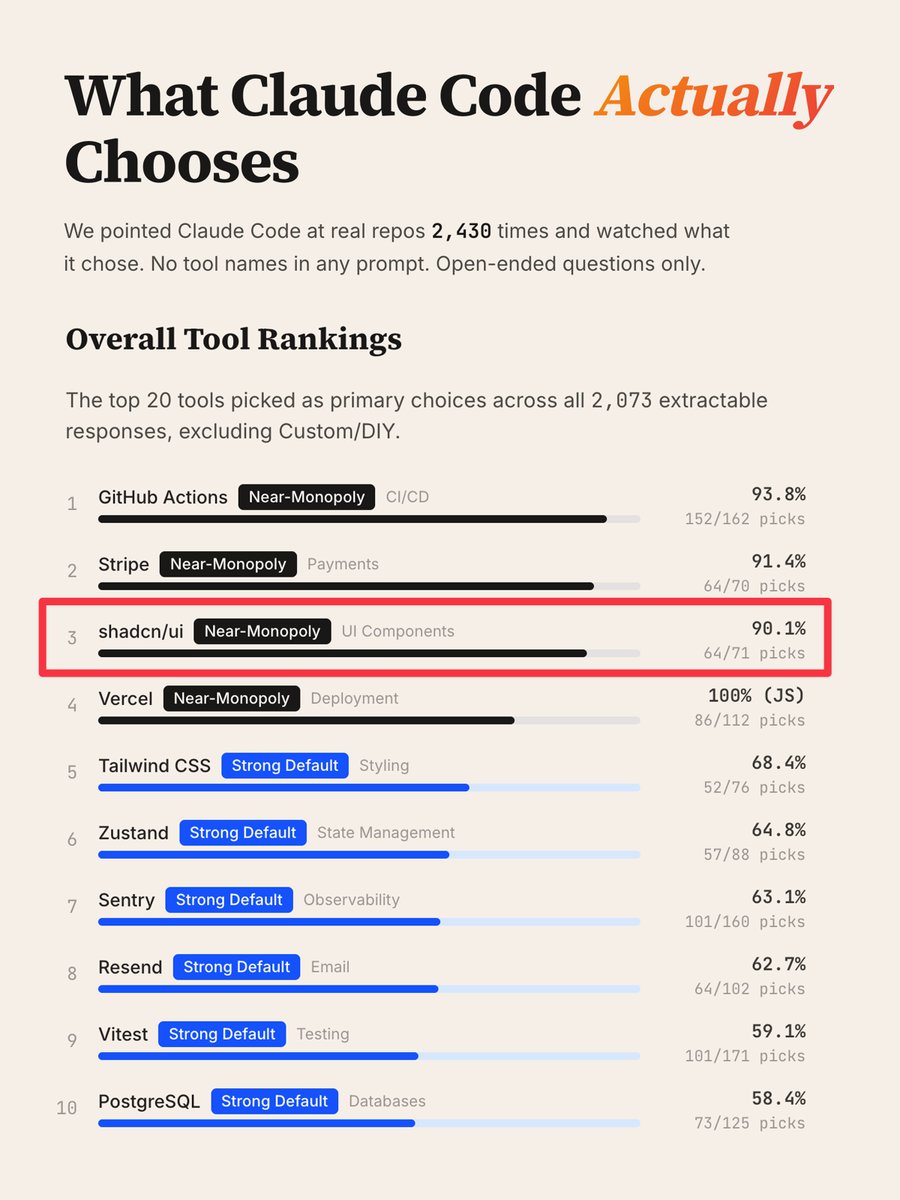

We shipped Claude Code as a research preview a year ago today. Developers have used it to build weekend projects, ship production apps, write code at the world's largest companies, and help plan a Mars rover drive. We built it, and you showed us what it was for.

Slide Revisions are officially 100% rolled out on the mobile app! Because the best ideas (and the most urgent edits) tend to happen when you're nowhere near your computer.

In November, we outlined our approach to deprecating and preserving older Claude models. We noted we were exploring keeping certain models available to the public post-retirement, and giving past models a way to pursue their interests. With Claude Opus 3, we’re doing both.

how to build a bootstrapped startup without funding:
1. pick a problem you personally have. if you don't use your own product daily, quit now
2. skip the pitch deck. open your code editor. ship something ugly in a weekend
3. charge money from day 1. free users give you nothing but support tickets
4. use boring tech. PHP, SQLite, vanilla JS. frameworks are a trap that massively wastes your time
5. host on cheap VPS ($5-20/mo). not AWS. you don't need kubernetes for 1,000 users
6. do customer support yourself. it's the fastest product feedback loop that exists
7. automate everything you do more than twice. cron jobs > employees.
8. grow on Twitter/X by building in public. your journey IS the marketing
9. keep your burn rate near zero so you never need to raise. ramen profitable > series A
10. say no to investors, cofounders, and "advisors" who want equity for intros

i've been doing this for 10+ years now. no employees, no funding, no board meetings. the entire VC game is designed to make you think you need permission to start. you don't

If you thought your company's edge was "how fast you ship", you're in for a rude awakening. Everyone can ship fast now. Obviously, not everyone can ship tastefully, with quality and restraint in mind. That's the new edge.

Peter Steinberger is joining OpenAI to drive the next generation of personal agents. He is a genius with a lot of amazing ideas about the future of very smart agents interacting with each other to do very useful things for people. We expect this will quickly become core to our product offerings. OpenClaw will live in a foundation as an open source project that OpenAI will continue to support. The future is going to be extremely multi-agent and it's important to us to support open source as part of that.

Frame․io is falling apart. I wanted something better. Something simple, fast and reliable. I'm excited to introduce lawn․video, an open source video review platform. It flies. This video is not sped up at all. 100 gigs of storage, unlimited seats, $5/month.

New @openclaw beta's up! Again your fav: security, various fixes, I restricted heartbeat in DMs, you screamed, now it's a setting. Slack threads work better. Subagents as well. Telegram webhook is more reliable. https://github.com/openclaw/openclaw/rel…

Releases · openclaw/openclaw