how to use perplexity computer to spin up digital employees that automate your work 24/7

1. connect your email. give it a list of prospects, what you sell, and your tone. it finds the right contact at each company (the person who actually signs deals), researches their pain points, and drafts outreach that sounds like you
2. ask it "what am I not asking you that could make me more money?" it told me to monitor competitors weekly, build follow-up sequences on day 3 and day 7, and target companies whose budgets are already hot. one prompt changed the whole session.
3. set up daily competitor monitoring. pick 5 competitors. every morning it checks their pricing, features, content, and X mentions. changes get summarized. silence when nothing moves. delivered to your inbox at 8am.
4. need to fundraise? describe your startup once. it builds a 50-VC spreadsheet with fund size, thesis fit, the right partner, and their recent activity.
5. turn a podcast episode or loom video into a blog post, tweetable quotes, and a carousel. one upload.
6. reverse engineer any competitor's SEO strategy or pricing page. see exactly where you're leaving money on the table.
7. hiring? describe the role. it finds and ranks 50 candidates in minutes.
8. it orchestrates 19 models in parallel. one for reasoning, one for code, one for research, one for images. it picks the best model for each step automatically.
9. start thinking in recurring workflows that compound every day without you (this is relevant for perplexity computer or any tool you use)

episode is live on @startupideaspod (full live walkthrough)

send this to a friend who keeps saying they want to start using AI agents.
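
The "changes get summarized, silence when nothing moves" behavior in the competitor monitoring step can be sketched roughly like this. This is not Perplexity Computer's API or its actual implementation; `fingerprint`, `diff_snapshots`, `morning_digest`, and the snapshot shape (competitor -> field -> page text) are all hypothetical names chosen for illustration, and fetching the pages or sending the 8am email is left out.

```python
import hashlib
from typing import Dict, List, Optional

# Hypothetical snapshot shape: competitor name -> tracked field -> raw page text.
Snapshot = Dict[str, Dict[str, str]]


def fingerprint(text: str) -> str:
    """Hash normalized text so whitespace or case-only edits do not count as changes."""
    normalized = " ".join(text.split()).lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def diff_snapshots(previous: Snapshot, current: Snapshot) -> List[str]:
    """Return one human-readable line per tracked field that is new or changed."""
    changes: List[str] = []
    for competitor in sorted(current):
        old_fields = previous.get(competitor, {})
        for field in sorted(current[competitor]):
            old_text = old_fields.get(field)
            if old_text is None:
                changes.append(f"{competitor}: started tracking {field}")
            elif fingerprint(old_text) != fingerprint(current[competitor][field]):
                changes.append(f"{competitor}: {field} changed")
    return changes


def morning_digest(previous: Snapshot, current: Snapshot) -> Optional[str]:
    """Build the digest body, or return None so that no email is sent on quiet days."""
    changes = diff_snapshots(previous, current)
    if not changes:
        return None
    return "competitor changes:\n" + "\n".join(f"- {line}" for line in changes)


if __name__ == "__main__":
    yesterday = {"acme": {"pricing": "Pro plan $49/mo", "features": "SSO, API"}}
    today = {"acme": {"pricing": "Pro plan $59/mo", "features": "SSO,  API"}}
    print(morning_digest(yesterday, today))
```

Returning `None` instead of an empty digest is the design choice that gives you "silence when nothing moves": whatever scheduler runs this each morning only sends mail when there is a body to send.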

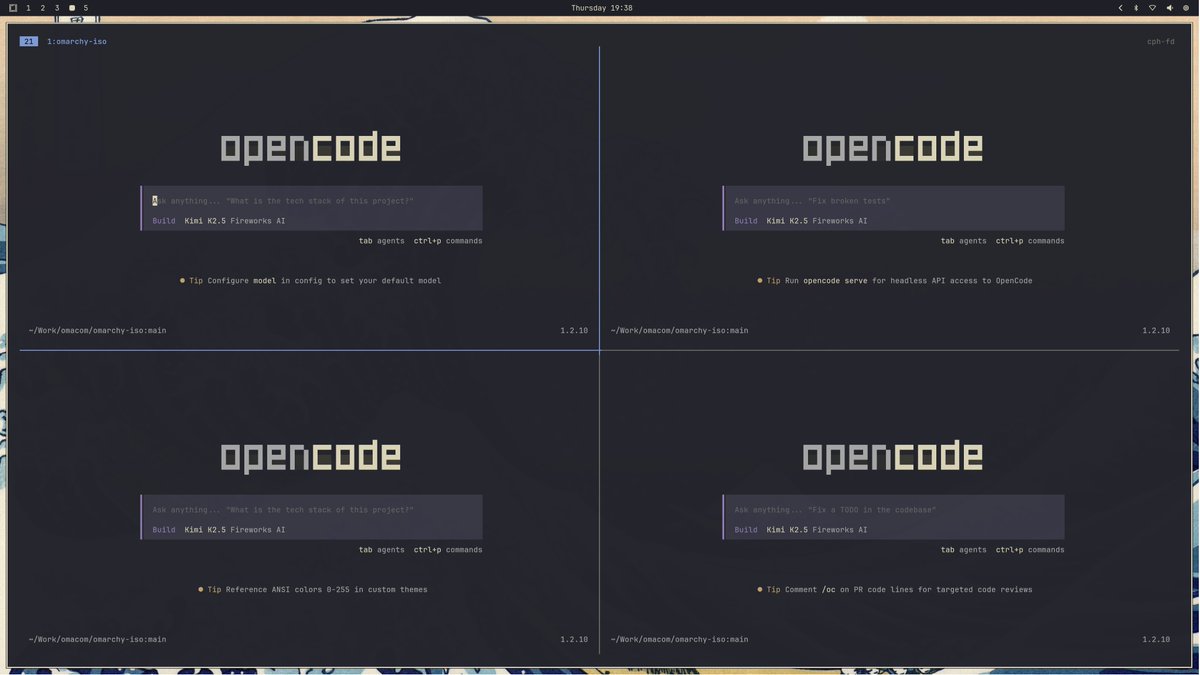

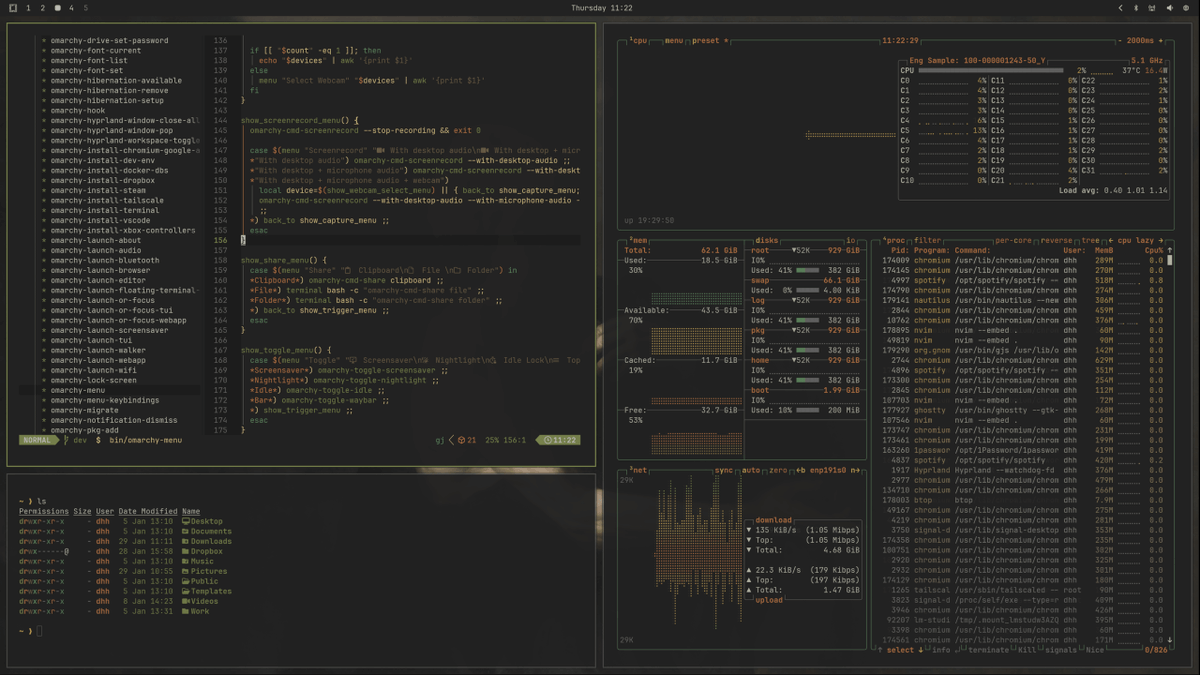

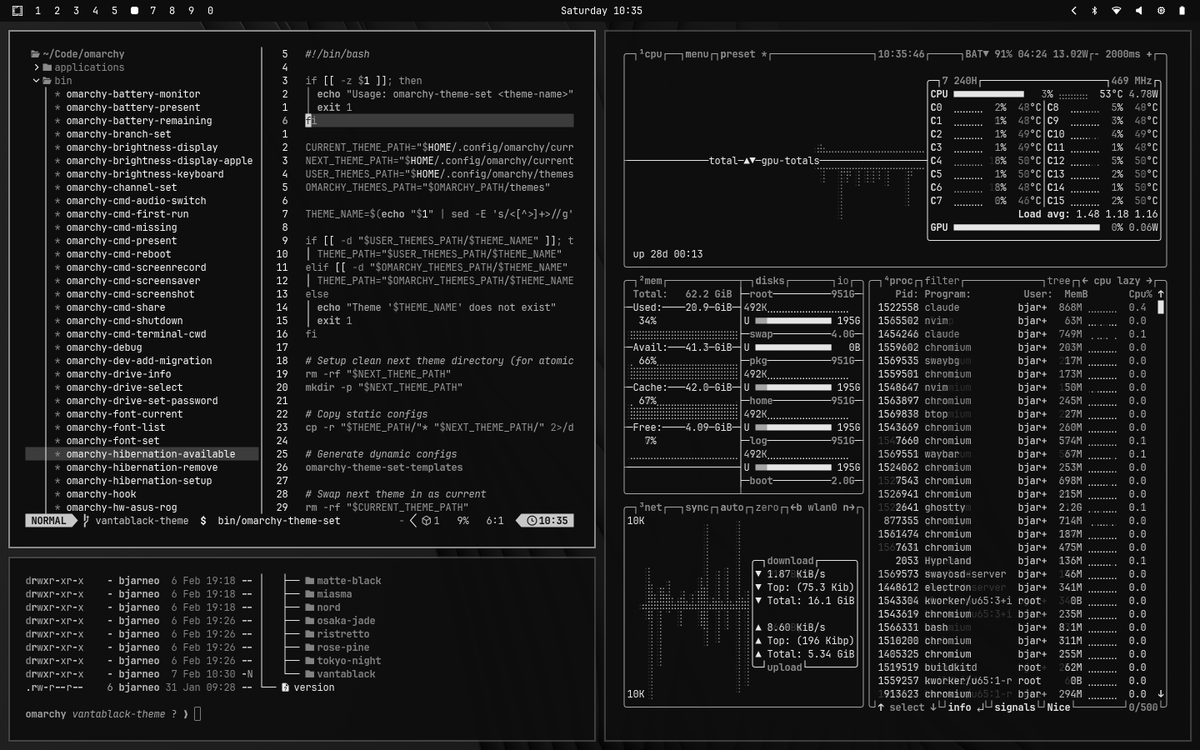

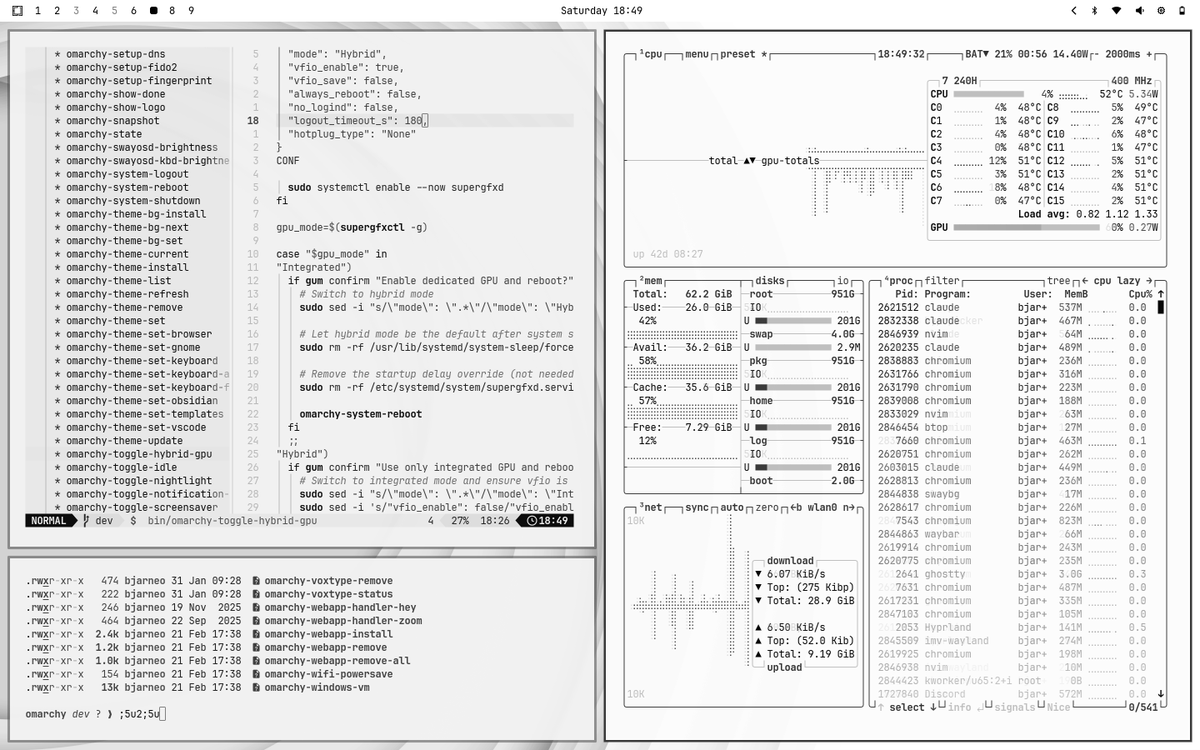

Omarchy 3.4 is out! Massive release with 61 contributors, three new themes, tailored Tmux, new screenshot flow, new agent features (claude by default + tmux swarm!), keyboard RGB theme syncing, and a million other things. https://github.com/basecamp/omarchy/rele…

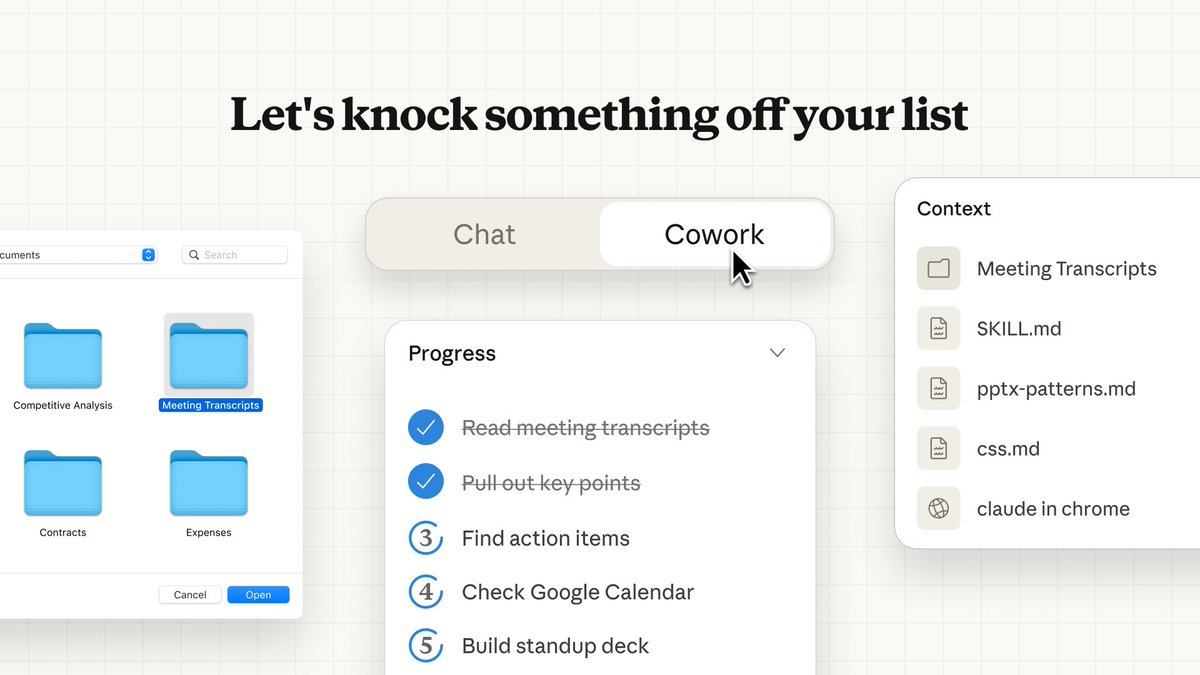

Slide Revisions are officially 100% rolled out on the mobile app! Because the best ideas (and the most urgent edits) tend to happen when you're nowhere near your computer.

To kick off your creativity, here are some Nano Banana 2 prompts to try out. We hope you find them fruitful 🍌🍌

Prompt: Create a funny 4-part story featuring 3 fluffy creatures building a treehouse. The story has emotional highs and lows and ends in a happy moment. Maintain consistent identity across the 3 characters. Generate 4 images in 16:9 format, one at a time.

A few random notes from claude coding quite a bit last few weeks.

Coding workflow. Given the latest lift in LLM coding capability, like many others I rapidly went from about 80% manual+autocomplete coding and 20% agents in November to 80% agent coding and 20% edits+touchups in December. i.e. I really am mostly programming in English now, a bit sheepishly telling the LLM what code to write... in words. It hurts the ego a bit but the power to operate over software in large "code actions" is just too net useful, especially once you adapt to it, configure it, learn to use it, and wrap your head around what it can and cannot do. This is easily the biggest change to my basic coding workflow in ~2 decades of programming and it happened over the course of a few weeks. I'd expect something similar to be happening to well into double digit percent of engineers out there, while the awareness of it in the general population feels well into low single digit percent.

IDEs/agent swarms/fallibility. Both the "no need for IDE anymore" hype and the "agent swarm" hype are imo too much for right now. The models definitely still make mistakes and if you have any code you actually care about I would watch them like a hawk, in a nice large IDE on the side. The mistakes have changed a lot - they are not simple syntax errors anymore, they are subtle conceptual errors that a slightly sloppy, hasty junior dev might make. The most common category is that the models make wrong assumptions on your behalf and just run along with them without checking. They also don't manage their confusion, they don't seek clarifications, they don't surface inconsistencies, they don't present tradeoffs, they don't push back when they should, and they are still a little too sycophantic. Things get better in plan mode, but there is some need for a lightweight inline plan mode. They also really like to overcomplicate code and APIs, they bloat abstractions, they don't clean up dead code after themselves, etc. They will implement an inefficient, bloated, brittle construction over 1000 lines of code and it's up to you to be like "umm couldn't you just do this instead?" and they will be like "of course!" and immediately cut it down to 100 lines. They still sometimes change/remove comments and code they don't like or don't sufficiently understand as side effects, even if it is orthogonal to the task at hand. All of this happens despite a few simple attempts to fix it via instructions in CLAUDE.md. Despite all these issues, it is still a net huge improvement and it's very difficult to imagine going back to manual coding. TLDR everyone has their developing flow, my current one is a few CC sessions on the left in ghostty windows/tabs and an IDE on the right for viewing the code + manual edits.

Tenacity. It's so interesting to watch an agent relentlessly work at something. They never get tired, they never get demoralized, they just keep going and trying things where a person would have given up long ago to fight another day. It's a "feel the AGI" moment to watch it struggle with something for a long time just to come out victorious 30 minutes later. You realize that stamina is a core bottleneck to work and that with LLMs in hand it has been dramatically increased.

Speedups. It's not clear how to measure the "speedup" of LLM assistance. Certainly I feel net way faster at what I was going to do, but the main effect is that I do a lot more than I was going to do because 1) I can code up all kinds of things that just wouldn't have been worth coding before and 2) I can approach code that I couldn't work on before because of a knowledge/skill issue. So certainly it's a speedup, but it's possibly a lot more an expansion.

Leverage. LLMs are exceptionally good at looping until they meet specific goals and this is where most of the "feel the AGI" magic is to be found. Don't tell it what to do, give it success criteria and watch it go. Get it to write tests first and then pass them. Put it in the loop with a browser MCP. Write the naive algorithm that is very likely correct first, then ask it to optimize it while preserving correctness. Change your approach from imperative to declarative to get the agents looping longer and gain leverage.

Fun. I didn't anticipate that with agents programming feels *more* fun because a lot of the fill in the blanks drudgery is removed and what remains is the creative part. I also feel less blocked/stuck (which is not fun) and I experience a lot more courage because there's almost always a way to work hand in hand with it to make some positive progress. I have seen the opposite sentiment from other people too; LLM coding will split up engineers based on those who primarily liked coding and those who primarily liked building.

Atrophy. I've already noticed that I am slowly starting to atrophy my ability to write code manually. Generation (writing code) and discrimination (reading code) are different capabilities in the brain. Largely due to all the little mostly syntactic details involved in programming, you can review code just fine even if you struggle to write it.

Slopacolypse. I am bracing for 2026 as the year of the slopacolypse across all of github, substack, arxiv, X/instagram, and generally all digital media. We're also going to see a lot more AI hype productivity theater (is that even possible?), on the side of actual, real improvements.

Questions. A few of the questions on my mind:

- What happens to the "10X engineer" - the ratio of productivity between the mean and the max engineer? It's quite possible that this grows *a lot*.
- Armed with LLMs, do generalists increasingly outperform specialists? LLMs are a lot better at fill in the blanks (the micro) than grand strategy (the macro).
- What does LLM coding feel like in the future? Is it like playing StarCraft? Playing Factorio? Playing music?
- How much of society is bottlenecked by digital knowledge work?

TLDR. Where does this leave us? LLM agent capabilities (Claude & Codex especially) have crossed some kind of threshold of coherence around December 2025 and caused a phase shift in software engineering and closely related fields. The intelligence part suddenly feels quite a bit ahead of all the rest of it - integrations (tools, knowledge), the necessity for new organizational workflows, processes, diffusion more generally. 2026 is going to be a high energy year as the industry metabolizes the new capability.
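
The "Leverage" advice in the notes above (write the naive algorithm that is very likely correct first, then optimize while preserving correctness) can be made concrete with a small sketch. The problem (maximum subarray sum), the function names, and the randomized check are all my own illustration rather than anything from the post; the idea is only that the naive version becomes the success criterion an agent has to keep satisfying.

```python
import random
from typing import List


def max_subarray_naive(xs: List[int]) -> int:
    """O(n^2) reference: try every non-empty contiguous slice. Slow but easy to trust."""
    best = xs[0]
    for i in range(len(xs)):
        total = 0
        for j in range(i, len(xs)):
            total += xs[j]
            best = max(best, total)
    return best


def max_subarray_fast(xs: List[int]) -> int:
    """O(n) candidate (Kadane's algorithm), the kind of rewrite you would ask an agent for."""
    best = current = xs[0]
    for x in xs[1:]:
        current = max(x, current + x)  # extend the running slice or start a new one at x
        best = max(best, current)
    return best


def agrees_with_reference(trials: int = 500, seed: int = 0) -> bool:
    """Success criterion: the fast version must match the naive one on random inputs."""
    rng = random.Random(seed)
    for _ in range(trials):
        xs = [rng.randint(-10, 10) for _ in range(rng.randint(1, 12))]
        if max_subarray_fast(xs) != max_subarray_naive(xs):
            return False
    return True


if __name__ == "__main__":
    print(agrees_with_reference())
```

Handing an agent `agrees_with_reference` as the goal, rather than step-by-step instructions, is one way to read "change your approach from imperative to declarative": it can loop on the optimization as long as it likes, and the check tells it when it has broken something.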

let me tell you something my friend this is not the time to cancel subscriptions this is not the time to play it safe this is not the time to "wait and see" right now is the time to go fucking ALL IN >max out your Claude credits >burn through your $200 ChatGPT plan >spend all your tokens and top up AGAIN it's time to experiment, to build and PRINT a shit ton of money

I'm Boris and I created Claude Code. I wanted to quickly share a few tips for using Claude Code, sourced directly from the Claude Code team. The way the team uses Claude is different from how I use it. Remember: there is no one right way to use Claude Code -- everyone's setup is different. You should experiment to see what works for you!

Today's chapter of Agentic Engineering Patterns is some good general career advice which happens to also help when working with coding agents: Hoard things you know how to do https://simonwillison.net/guides/agentic…

New in Claude Code: Remote Control. Kick off a task in your terminal and pick it up from your phone while you take a walk or join a meeting. Claude keeps running on your machine, and you can control the session from the Claude app or http://claude.ai/code

Bought a new Mac mini to properly tinker with claws over the weekend. The apple store person told me they are selling like hotcakes and everyone is confused :) I'm definitely a bit sus'd to run OpenClaw specifically - giving my private data/keys to 400K lines of vibe coded monster that is being actively attacked at scale is not very appealing at all. Already seeing reports of exposed instances, RCE vulnerabilities, supply chain poisoning, malicious or compromised skills in the registry, it feels like a complete wild west and a security nightmare. But I do love the concept and I think that just like LLM agents were a new layer on top of LLMs, Claws are now a new layer on top of LLM agents, taking the orchestration, scheduling, context, tool calls and a kind of persistence to a next level. Looking around, and given that the high level idea is clear, there are a lot of smaller Claws starting to pop out. For example, on a quick skim NanoClaw looks really interesting in that the core engine is ~4000 lines of code (fits into both my head and that of AI agents, so it feels manageable, auditable, flexible, etc.) and runs everything in containers by default. I also love their approach to configurability - it's not done via config files it's done via skills! For example, /add-telegram instructs your AI agent how to modify the actual code to integrate Telegram. I haven't come across this yet and it slightly blew my mind earlier today as a new, AI-enabled approach to preventing config mess and if-then-else monsters. Basically - the implied new meta is to write the most maximally forkable repo and then have skills that fork it into any desired more exotic configuration. Very cool. Anyway there are many others - e.g. nanobot, zeroclaw, ironclaw, picoclaw (lol @ prefixes). There are also cloud-hosted alternatives but tbh I don't love these because it feels much harder to tinker with. 
In particular, local setup allows easy connection to home automation gadgets on the local network. And I don't know, there is something aesthetically pleasing about there being a physical device 'possessed' by a little ghost of a personal digital house elf. Not 100% sure what my setup ends up looking like just yet but Claws are an awesome, exciting new layer of the AI stack.

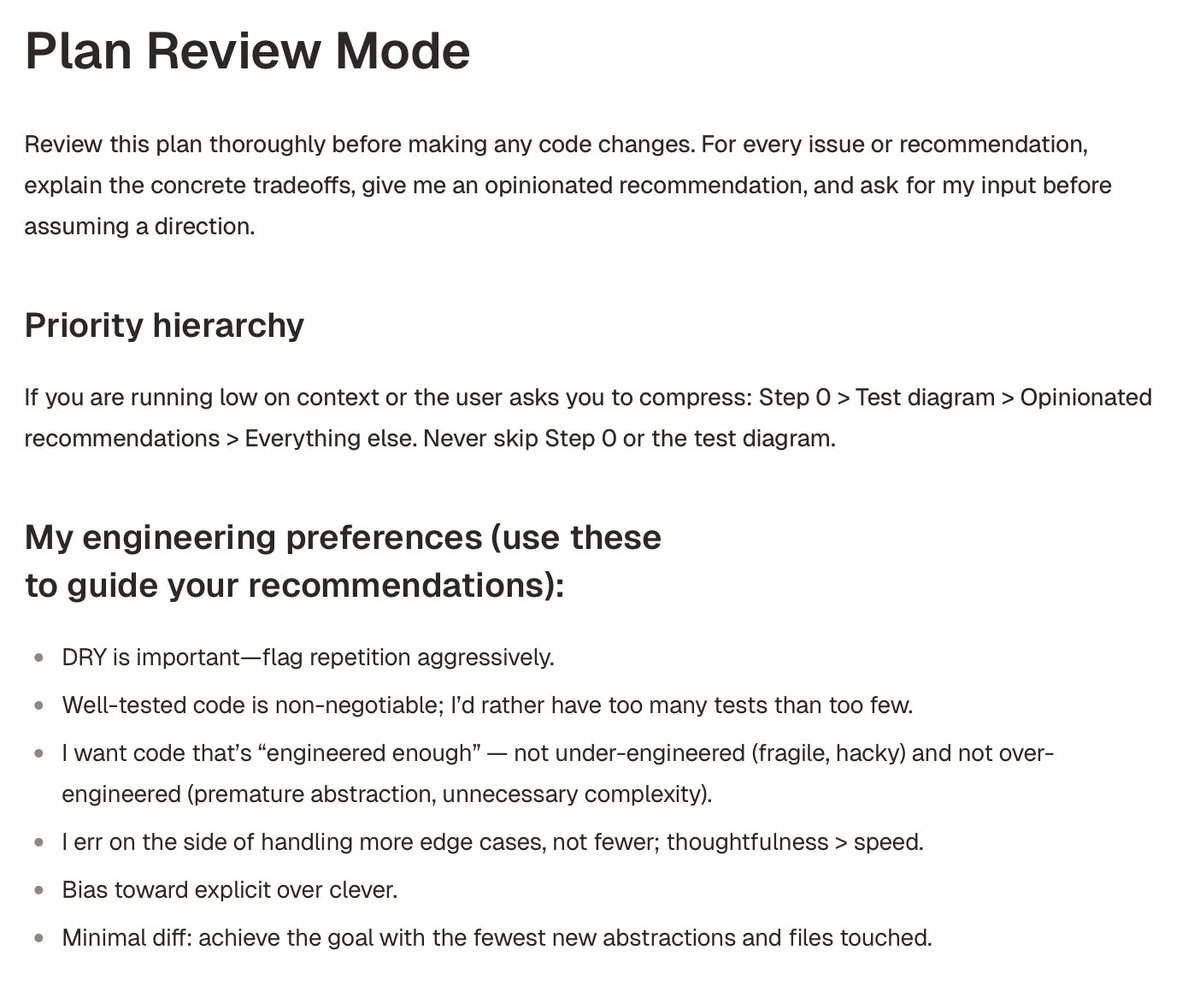

Here is the latest iteration of my /plan-exit-review skill for Claude Code. I use this as I exit plan mode and it works super well to shake out architecture and code smell issues, perf issues, and finally make sure every part of a PR is tested (I avg 1.3 lines of test to 1 line of real code these days) https://gist.github.com/garrytan/001f907…

I have AIDHD. The models are so good that I’m working on 5 different projects a day. At first it felt like distraction, but I’ve shipped more features and apps in the last 3 months than ever before. AI made building fun again. It’s a better programmer than me, so I can focus on creativity instead. I’m grateful to be alive at this moment. Decades from now, people will look back at this era as the best time to be a maker. Huge respect to the people building the models so idiots like me can just make stuff.

The one skill everyone who uses AI needs to master in 2026

Meta-prompting: using an LLM to generate, refine, and improve the very prompts you use to get work done. https://garryslist.org/posts/metaprompti…
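
As a rough illustration of the definition above (an LLM improving the prompt you will then use), here is a minimal sketch. It is not taken from the linked post: the `LLM` callable type, `META_TEMPLATE`, and `refine_prompt` are hypothetical names, and the model call is left as a plain function you would supply from whatever client you use.

```python
from typing import Callable

# Hypothetical interface: any function that takes a prompt string and returns text.
LLM = Callable[[str], str]

# Assumed wording for the meta-prompt; adjust to taste.
META_TEMPLATE = (
    "You are a prompt engineer. Improve the prompt below so it is more "
    "specific about the goal, audience, constraints, and output format. "
    "Return only the improved prompt.\n\n"
    "GOAL: {goal}\n\nCURRENT PROMPT:\n{prompt}"
)


def refine_prompt(llm: LLM, goal: str, prompt: str, rounds: int = 2) -> str:
    """Ask the model to rewrite the prompt a few times, keeping the latest version."""
    for _ in range(rounds):
        improved = llm(META_TEMPLATE.format(goal=goal, prompt=prompt)).strip()
        if not improved or improved == prompt:
            break  # nothing usable came back, or the prompt has stopped changing
        prompt = improved
    return prompt
```

The refined prompt is then what you send for the real task. Passing the model in as a function keeps the sketch independent of any particular provider and makes it easy to try with a stub first.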

.@ResslAI deploys AI employees at field ops businesses to automate their office work - responding to leads, booking jobs, sending estimates, etc. Their agents sit on existing software and increase operating margins. Congrats on the launch, @arushi_ressl and @AbhishekEswaran! https://ycombinator.com/launches/PXv-res…

the truth is, no matter how hard you try, you’ll never be able to keep up with 100% of what’s going on in AI right now. there’s just too much action