Why LLMs Cannot Play Chess Despite Knowing Notation

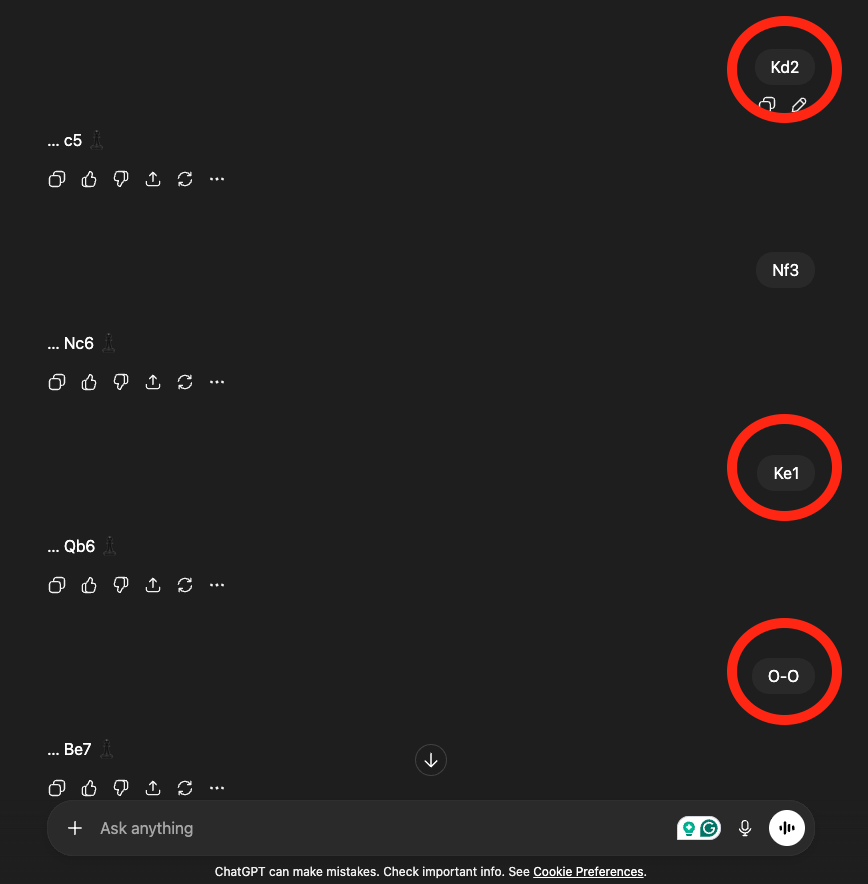

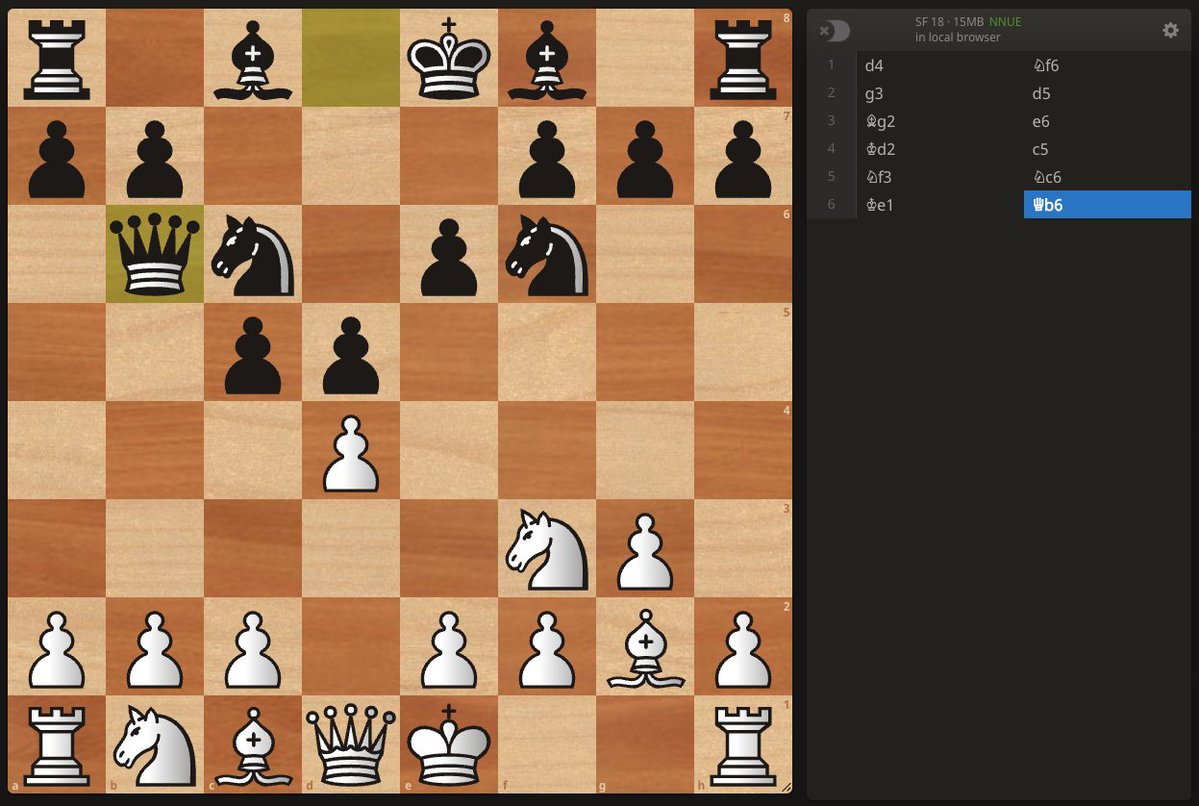

LLMs struggle at chess because it is not a language task. They are trained to predict the next token in a text sequence, not to optimize decisions over a structured game state. They embed patterns from chess notation, but they don't maintain a real board state, enumerate legal moves, or propagate evaluations through a search. Interestingly, if you feed an LLM the moves of a famous game like the Opera Game played by Paul Morphy, it may reproduce the continuation perfectly, because that sequence (PGN) sits cleanly inside the training distribution. By contrast, in the attached screenshot, I played an illegal castling move and ChatGPT accepted it, because in that position castling looks like the statistically common next move in many PGN games. From an LLM's perspective, the pattern "develop pieces → castle" has a very high probability. So when you ask an LLM for a move, it is sampling something plausible given its training data instead of actually solving chess. That's why engines that combine search + evaluation (Stockfish) blow past text-trained models.
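The gap between "statistically common" and "legal" can be shown with a toy sketch. This is an illustration under loud assumptions, not how ChatGPT or any real model works: the corpus `TOY_GAMES` is made up, the "model" is a bare bigram counter over White's moves, and `castling_allowed` checks only one of the castling rules (the king must not have moved). All function names here are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: White's moves only, in SAN. Not real game data.
TOY_GAMES = [
    ["e4", "Nf3", "Bc4", "O-O"],
    ["e4", "Nf3", "Bb5", "O-O"],
    ["d4", "Nf3", "Bc4", "O-O"],
    ["e4", "Nf3", "Bc4", "d3"],
]

def train_next_move_counts(games):
    """Count which move follows each move (a bigram model over notation)."""
    counts = defaultdict(Counter)
    for moves in games:
        for prev, nxt in zip(moves, moves[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, history):
    """Return the most frequent follower of the last move, ignoring the board."""
    followers = counts.get(history[-1])
    return followers.most_common(1)[0][0] if followers else None

def castling_allowed(history):
    """Partial state check: an earlier king move (SAN starting with 'K')
    forfeits castling. Real legality has more conditions than this one."""
    return not any(move.startswith("K") for move in history)

counts = train_next_move_counts(TOY_GAMES)

# White's king stepped out and back, so castling rights are gone,
# yet the last move still looks like ordinary development.
history = ["e4", "Nf3", "Ke2", "Ke1", "Bc4"]

suggestion = predict_next(counts, history)
print("pattern-based suggestion:", suggestion)
print("castling still allowed:  ", castling_allowed(history))
```

The pattern counter proposes castling because it only sees that a bishop was just developed, while the state check rejects it because it remembers the earlier king moves. A real engine tracks the full position, which is what makes legal move enumeration and search possible.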
